FashionMNIST tutorial

Fashion-MNIST is a dataset of Zalando's article images, with a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image (784 pixels in total) labeled with one of 10 clothing classes. Each pixel has a single integer value from 0 to 255 indicating its lightness or darkness, with higher values meaning darker. In the CSV version of the dataset, each row therefore has 785 columns: the label followed by the 784 pixel values.
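As a quick illustration of the layout described above, the sketch below splits one CSV row into its label and a 28x28 pixel grid. It assumes, as in the commonly distributed CSV files, that the label is the first column and the pixels follow in row-major order, so pixel k sits at row k // 28, column k % 28. The helper `parse_row` is hypothetical and not part of the tutorial, which loads the data through torchvision instead.

```python
HEIGHT, WIDTH = 28, 28
NUM_PIXELS = HEIGHT * WIDTH   # 784 pixels per image
NUM_COLUMNS = 1 + NUM_PIXELS  # label + pixels = 785 columns per CSV row


def parse_row(row):
    """Split one CSV row into (label, 28x28 grid of pixel values).

    Assumes the label is the first column and pixels are stored row by row.
    """
    values = [int(v) for v in row.split(",")]
    if len(values) != NUM_COLUMNS:
        raise ValueError(f"expected {NUM_COLUMNS} values, got {len(values)}")
    label, pixels = values[0], values[1:]
    if not all(0 <= p <= 255 for p in pixels):
        raise ValueError("pixel values must lie in [0, 255]")
    # Row r holds pixels r*28 .. r*28 + 27
    grid = [pixels[r * WIDTH:(r + 1) * WIDTH] for r in range(HEIGHT)]
    return label, grid
```

torchvision's `ToTensor` transform, used later in this tutorial, additionally rescales these 0-255 values to floats between 0.0 and 1.0.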

In this tutorial, we use Covalent to train a neural network (NN) with PyTorch. All computations are executed on Covalent Cloud, at a total cost of about $0.50.

Getting started

The tutorial requires Covalent, Covalent Cloud, torchvision, and Plotly, as listed in `requirements.txt`:

```python
with open("./requirements.txt", "r") as file:
    for line in file:
        print(line.rstrip())
```

```
covalent
covalent-cloud
torchvision==0.17.0
plotly==5.18.0
```

Uncomment and run the following cell to install the libraries.

```python
# Installing required packages
# !pip install -r ./requirements.txt
```
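After installing, you may want to confirm that the pinned versions are the ones actually in use. The following is a minimal sketch using only the standard library's `importlib.metadata`; the helpers `parse_requirements` and `check_pins` are hypothetical, handle only plain `name` and `name==version` lines, and ignore local build suffixes such as `+cpu`.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_requirements(text):
    """Map each package name to its pinned version, or None if unpinned."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, _, pinned = line.partition("==")
        pins[name.strip()] = pinned.strip() or None
    return pins


def check_pins(pins):
    """Return a list of human-readable problems; empty means all pins match."""
    problems = []
    for name, pinned in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed")
            continue
        installed = installed.split("+")[0]  # drop local suffixes like "+cpu"
        if pinned is not None and installed != pinned:
            problems.append(f"{name}: installed {installed}, expected {pinned}")
    return problems
```

For example, `check_pins(parse_requirements(open("./requirements.txt").read()))` should return an empty list once the installation above has succeeded.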

Import Covalent, Covalent Cloud, PyTorch, and the other libraries used in this tutorial.

```python
from pathlib import Path  # used by train_model to build the model file path

import covalent_cloud as cc
import covalent as ct
import plotly.express as px
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision.datasets import FashionMNIST
from torchvision.transforms import ToTensor
```

Save your API key and create the Covalent Cloud environment in which the tasks will run.

```python
cc.save_api_key("YOUR-API-KEY")  # replace with your own Covalent Cloud API key

cc.create_env(
    name="fashionmnist",
    conda={"dependencies": ["python=3.10", "libstdcxx-ng"]},
    pip=["torchvision==0.17.0", "plotly", "pandas"],
    wait=True,
)
```

Executors allocate compute resources and are attached to environments.

```python
cpu_executor = cc.CloudExecutor(
    env="fashionmnist",  # the environment created above
    num_cpus=2,
    memory="8GB",
    time_limit="1 hour",
)
```

FashionMNIST classifier

We will build a classifier for the FashionMNIST dataset using a simple neural network.

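```python
class SimpleNN(nn.Module):
    def __init__(self):
        super(SimpleNN, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 128)  # 784 input pixels -> 128 hidden units
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(128, 10)  # 128 hidden units -> 10 class scores

    def forward(self, x):
        x = x.view(-1, 28 * 28)  # flatten each 28x28 image into a 784-vector
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        return x  # raw logits; nn.CrossEntropyLoss applies log-softmax itself
```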
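To get a feel for the size of this model: `x.view(-1, 28 * 28)` turns a batch of shape (batch, 1, 28, 28) into (batch, 784), and each `nn.Linear(in, out)` layer holds an `in x out` weight matrix plus `out` biases. The short calculation below (with a hypothetical helper `linear_params`) adds these up for `SimpleNN`.

```python
def linear_params(in_features, out_features, bias=True):
    """Trainable parameters of nn.Linear(in_features, out_features)."""
    return in_features * out_features + (out_features if bias else 0)


fc1_params = linear_params(28 * 28, 128)  # 100,352 weights + 128 biases
fc2_params = linear_params(128, 10)       # 1,280 weights + 10 biases
total_params = fc1_params + fc2_params    # nn.ReLU has no parameters

print(fc1_params, fc2_params, total_params)  # 100480 1290 101770
```

With PyTorch available, `sum(p.numel() for p in SimpleNN().parameters())` should report the same total.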

Next, we define a data loader that automatically downloads the data and converts each image to a tensor.

```python
@ct.electron(executor=cpu_executor)
def data_loader(
    batch_size: int, train: bool, download: bool = True,
    data_dir: str = "./data/", shuffle: bool = True,
):
    # Download (if needed) the train or test split and convert images to tensors
    data = FashionMNIST(
        root=data_dir, train=train, download=download, transform=ToTensor()
    )
    return DataLoader(data, batch_size=batch_size, shuffle=shuffle)
```
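`len(dataloader)` is the number of batches, which `train_model` uses to average the loss over an epoch. With `DataLoader`'s default `drop_last=False`, the final batch holds whatever examples are left over. The hypothetical helper below works this out for the batch sizes passed in the dispatch call (256 for training, 64 for testing).

```python
import math


def batch_layout(num_examples, batch_size):
    """Return (number of batches, size of the last batch), assuming drop_last=False."""
    num_batches = math.ceil(num_examples / batch_size)
    last_batch = num_examples - (num_batches - 1) * batch_size
    return num_batches, last_batch


print(batch_layout(60_000, 256))  # (235, 96): training split
print(batch_layout(10_000, 64))   # (157, 16): test split
```

Since `train_model` averages per-batch mean losses, the smaller final batch counts as much as a full one; with 235 batches the effect on the reported loss is small.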

Model training is where we reuse our SimpleNN and define how it is optimized using the FashionMNIST data.

```python
@ct.electron(executor=cpu_executor)
def train_model(dataloader, num_epochs: int, learning_rate: float):
    model = SimpleNN()
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)
    losses = []

    # Training the model
    for epoch in range(num_epochs):
        model.train()
        running_loss = 0.0
        for inputs, labels in dataloader:
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        # Average of the per-batch losses for this epoch
        print(
            f"{epoch+1}/{num_epochs}: loss {running_loss/len(dataloader)}"
        )
        losses.append(running_loss / len(dataloader))

    # Save the model to the volume
    model_folder = Path("/volumes") / "models"
    model_folder.mkdir(exist_ok=True)
    model_file_path = model_folder / "model.pth"
    torch.save(model.state_dict(), model_file_path)
    return model_file_path, losses
```
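`nn.CrossEntropyLoss` takes the raw logits returned by `SimpleNN`, applies a log-softmax, and returns the negative log-probability of the correct class, averaged over the batch by default. The plain-Python sketch below (hypothetical helpers, not PyTorch code) computes the same quantity. A useful sanity check follows from it: a model whose 10 outputs are all equal has a loss of ln(10), about 2.30, so losses near that value are expected at the very start of training.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one example: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits, targets):
    """Batch mean, like nn.CrossEntropyLoss's default reduction='mean'."""
    losses = [cross_entropy(row, t) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


print(cross_entropy([0.0] * 10, 3))  # ln(10), about 2.3026
```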

The model is then evaluated on the test set, using the weights loaded back from the volume.

```python
@ct.electron(executor=cpu_executor)
def test_model(dataloader, model_file_path):
    # Load the model from the volume
    model = SimpleNN()
    model.load_state_dict(torch.load(model_file_path))
    model.eval()

    correct = 0
    total = 0
    with torch.no_grad():
        for inputs, labels in dataloader:
            outputs = model(inputs)
            _, predicted = torch.max(outputs.data, 1)  # index of the highest score
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    accuracy = correct / total
    print(f"Accuracy on the test set: {accuracy:.4f}")
    return accuracy
```
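`torch.max(outputs.data, 1)` returns the maximum values and their indices along dimension 1; the indices are the predicted classes (`outputs.argmax(dim=1)` is a common alternative). `test_model` sums correct predictions and example counts over all batches before dividing, so the smaller final batch is weighted correctly. The plain-Python sketch below mirrors that logic on lists of logits; `evaluate` is a hypothetical helper, not part of the tutorial.

```python
def evaluate(batches):
    """Accuracy over (logits, labels) batches, accumulated like test_model."""
    correct = 0
    total = 0
    for logits, labels in batches:
        # Predicted class = index of the largest logit in each row
        predicted = [max(range(len(row)), key=row.__getitem__) for row in logits]
        correct += sum(p == y for p, y in zip(predicted, labels))
        total += len(labels)
    return correct / total
```

For example, two batches where 2 of 3 predictions are right give an accuracy of 2/3, not the mean of the per-batch accuracies.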

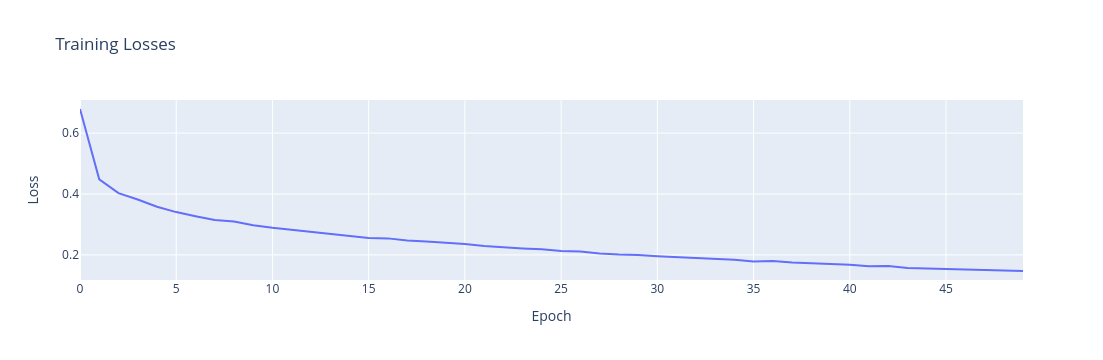

Next, we plot the training loss over epochs.

```python
@ct.electron(executor=cpu_executor)
def plot_losses(losses):
    return px.line(
        y=losses, title="Training Losses", labels={"y": "Loss", "x": "Epoch"}
    )
```

Finally, we put everything together to create the lattice.

```python
@ct.lattice(workflow_executor=cpu_executor, executor=cpu_executor)
def workflow(train_batch_size: int, test_batch_size: int, epoch_num: int = 5):
    train_loader = data_loader(train_batch_size, train=True)
    test_loader = data_loader(test_batch_size, train=False, shuffle=False)
    model_path, losses = train_model(
        train_loader, num_epochs=epoch_num, learning_rate=0.001
    )
    accuracy = test_model(test_loader, model_path)
    fig = plot_losses(losses)
    return accuracy, fig
```

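Alternatively, we can define a GPU executor and apply it to the training step to speed up training. Note that `train_model` as written never moves the model or the batches to the GPU, so to actually train on the GPU it would need to send both there with `.to("cuda")`; `test_model`, which runs on a CPU executor, would then need `torch.load(model_file_path, map_location="cpu")` to load the saved weights.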

```python
gpu_executor = cc.CloudExecutor(
    env="fashionmnist",
    num_cpus=2,
    memory="8GB",
    time_limit="1 hours",
    num_gpus=1,
    gpu_type="v100",
)

# Run the training step on the GPU executor instead
train_model = ct.electron(train_model, executor=gpu_executor)
```

Running Workflow

Finally, we can execute (dispatch) the workflow. We use Covalent Cloud's volume feature to persist the trained model so that it remains available after the execution finishes.

```python
volume = cc.volume("/models")

dispatch_id = cc.dispatch(workflow, volume=volume)(
    train_batch_size=256, test_batch_size=64, epoch_num=50
)
```

You can then go to your Covalent Cloud account, locate your workflow, and view its execution graph.

To retrieve the result:

```python
result = cc.get_result(dispatch_id, wait=True)  # wait for the workflow to finish
result.result.load()
accuracy, fig = result.result.value
```

Displaying the `fig` object (for example, as the last expression in a notebook cell, or with `fig.show()`) renders the training loss curve.

Conclusion

In this guide, we built a FashionMNIST classifier entirely on the Covalent Cloud platform. We showed how easy it is to experiment with different hyperparameters, switch between CPU and GPU executors, and save trained models, all without leaving your Jupyter Notebook. Running the provided workflow with training on a GPU node (V100) for 50 epochs costs about $0.50 in total.

The full code can be found below.

Full Code

```python
import covalent as ct
import covalent_cloud as cc
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision.transforms import ToTensor
from torch.utils.data import DataLoader
from torchvision.datasets import FashionMNIST
import plotly.express as px
from pathlib import Path


class SimpleNN(nn.Module):
    def __init__(self):
        super(SimpleNN, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 128)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = x.view(-1, 28 * 28)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        return x


cc.save_api_key("YOUR-API-KEY")

cc.create_env(
    name="fashionmnist",
    conda={"dependencies": ["python=3.10", "libstdcxx-ng"]},
    pip=["torchvision==0.17.0", "plotly", "pandas"],
    wait=True,
)

executor = cc.CloudExecutor(
    env="fashionmnist",
    num_cpus=2,
    memory="8GB",
    time_limit="1 hours",
)

gpu_executor = cc.CloudExecutor(
    env="fashionmnist",
    num_cpus=2,
    memory="8GB",
    time_limit="1 hours",
    num_gpus=1,
    gpu_type="v100",
)


@ct.electron(executor=executor)
def data_loader(
    batch_size: int, train: bool, download: bool = True,
    data_dir: str = "/tmp/", shuffle: bool = True,
):
    data = FashionMNIST(
        root=data_dir, train=train, download=download, transform=ToTensor()
    )
    return DataLoader(data, batch_size=batch_size, shuffle=shuffle)


@ct.electron(executor=gpu_executor)
def train_model(dataloader, num_epochs: int, learning_rate: float):
    model = SimpleNN()
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)
    losses = []

    # Training the model
    for epoch in range(num_epochs):
        model.train()
        running_loss = 0.0
        for inputs, labels in dataloader:
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        print(
            f"{epoch+1}/{num_epochs}: loss {running_loss/len(dataloader)}"
        )
        losses.append(running_loss / len(dataloader))

    # Save the model to the volume
    model_folder = Path("/volumes") / "models"
    model_folder.mkdir(exist_ok=True)
    model_file_path = model_folder / "model.pth"
    torch.save(model.state_dict(), model_file_path)
    return model_file_path, losses


@ct.electron(executor=executor)
def test_model(dataloader, model_file_path):
    # Load the model from the volume
    model = SimpleNN()
    model.load_state_dict(torch.load(model_file_path))
    model.eval()

    correct = 0
    total = 0
    with torch.no_grad():
        for inputs, labels in dataloader:
            outputs = model(inputs)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    accuracy = correct / total
    print(f"Accuracy on the test set: {accuracy:.4f}")
    return accuracy


@ct.electron(executor=executor)
def plot_losses(losses):
    return px.line(
        y=losses, title="Training Losses", labels={"y": "Loss", "x": "Epoch"}
    )


@ct.lattice(workflow_executor=executor, executor=executor)
def workflow(train_batch_size: int, test_batch_size: int, epoch_num: int = 5):
    train_loader = data_loader(train_batch_size, train=True)
    test_loader = data_loader(test_batch_size, train=False, shuffle=False)
    model_path, losses = train_model(
        train_loader, num_epochs=epoch_num, learning_rate=0.001
    )
    accuracy = test_model(test_loader, model_path)
    fig = plot_losses(losses)
    return accuracy, fig


volume = cc.volume("/models")

dispatch_id = cc.dispatch(workflow, volume=volume)(
    train_batch_size=256, test_batch_size=64, epoch_num=50
)

result = cc.get_result(dispatch_id, wait=True)
result.result.load()
accuracy, fig = result.result.value
fig
```

We have used the following parts of Covalent Cloud in this tutorial: