Fine tuning and validating LLMs

Fine-tuning LLMs can enhance performance and produce more accurate, contextually relevant outputs.

In this tutorial, we'll fine-tune an LLM for summarization on Covalent Cloud using Hugging Face libraries for LoRA.

This tutorial costs less than $1 to run on Covalent Cloud.

Getting started

First, install the Covalent Cloud SDK.

pip install -U covalent-cloud

We'll need the following packages in this tutorial to fine-tune and validate the LLM. Installing these locally is not always necessary (we can write imports inside a decorated function's body to avoid local dependencies), but it does help to make development more natural.

accelerate==0.31.0

bitsandbytes==0.42.0

datasets==2.16.1

evaluate==0.4.2

peft==0.8.2

py7zr==0.21.0

rouge-score==0.1.2

tensorboardX==2.6.2.2

torch==2.2.0

transformers==4.37.2

Imports

Having installed the above packages, let's import them with the cell below.

import covalent as ct

import covalent_cloud as cc

import evaluate

import numpy as np

import pandas as pd

import torch

from datasets import load_dataset, load_from_disk

from peft import (LoraConfig, PeftConfig, PeftModel, TaskType, get_peft_model,

prepare_model_for_int8_training)

from transformers import (AutoModelForSeq2SeqLM, AutoTokenizer,

DataCollatorForSeq2Seq, Seq2SeqTrainer,

Seq2SeqTrainingArguments)

Be sure to copy your API key from the Covalent Cloud UI and paste it in the cell below.

cc.save_api_key("YOUR-API-KEY")

Cloud environment

The cloud environment for this tutorial is created in the cell below.

It's good practice to match the package versions here with the local environment where we develop the workflow.

cc.create_env(

name="finetune-samsum-acc",

pip=[

"accelerate==0.31.0",

"bitsandbytes==0.42.0",

"datasets==2.16.1",

"evaluate==0.4.2",

"peft==0.8.2",

"py7zr==0.21.0",

"rouge-score==0.1.2",

"tensorboardX==2.6.2.2",

"torch==2.2.0",

"transformers==4.37.2",

],

wait=True

)

Environment Already Exists.

Defining compute resources

We'll use two sets of compute resources in this tutorial, one for GPU-accelerated tasks and another for lighter CPU-bound tasks. As usual, these are represented by CloudExecutor instances.

cpu_executor = cc.CloudExecutor(

env="finetune-samsum-acc",

num_cpus=2,

memory="32GB",

time_limit="01:00:00" # 1 hour

)

gpu_executor = cc.CloudExecutor(

env="finetune-samsum-acc",

num_cpus=2,

num_gpus=1,

gpu_type="t4",

memory="32GB",

time_limit="01:00:00"

)

Cloud storage volume

We also create a cloud volume for workflow-wide storage among our tasks.

finetune_volume = cc.volume("finetune")

Workflow tasks

Loading and pre-processing data

To fine-tune our language model, we'll load the SAMSum dataset, which contains 16k summaries of conversations.

Notice that this loading task uses the cpu_executor, since we don't really need GPUs for this.

@ct.electron(executor=cpu_executor)

def load_data():

"""Load SAMSum dataset from the hub and save it to disk."""

parent_folder = finetune_volume / "datasets"

ds_cache_dir = parent_folder / "cache"

save_dir = parent_folder / "samsum"

ds_cache_dir.mkdir(exist_ok=True, parents=True)

save_dir.mkdir(exist_ok=True, parents=True)

dataset = load_dataset("samsum", cache_dir=ds_cache_dir)

# Save dataset to disk

dataset.save_to_disk(save_dir)

return save_dir

This next task will do some pre-processing on the dataset.

@ct.electron(executor=cpu_executor)

def preprocess_dataset(

dataset_path, tokenizer_path, max_source_length=255, max_target_length=90

):

"""Preprocess the dataset for finetuning."""

dataset = load_dataset("samsum", data_dir=dataset_path)

tokenizer = AutoTokenizer.from_pretrained(tokenizer_path)

def preprocess_function(sample):

inputs = [f"summarize: {item}" for item in sample["dialogue"]]

model_inputs = tokenizer(

inputs, max_length=max_source_length, padding="max_length", truncation=True

)

labels = tokenizer(

sample["summary"], max_length=max_target_length, padding="max_length", truncation=True

)

labels["input_ids"] = [

[lab if lab != tokenizer.pad_token_id else -100 for lab in label]

for label in labels["input_ids"]

]

model_inputs["labels"] = labels["input_ids"]

return model_inputs

dataset_transformed = dataset.map(

preprocess_function, batched=True,

remove_columns=["dialogue", "summary", "id"]

)

preprocessed_dataset_path = finetune_volume / "preprocessed"

# Save dataset to disk

dataset_transformed.save_to_disk(preprocessed_dataset_path)

return preprocessed_dataset_path

Loading the model and tokenizer

Here, we load (download) the pre-trained tokenizer and save it to the finetune_volume created earlier.

@ct.electron(executor=cpu_executor)

def load_tokenizer(model_id="google/flan-t5-small"):

"""Load tokenizer and save it to disk."""

tokenizer = AutoTokenizer.from_pretrained(model_id)

tokenizer_folder = finetune_volume / "tokenizer"

tokenizer_folder.mkdir(exist_ok=True, parents=True)

# Save tokenizer to model folder

tokenizer.save_pretrained(tokenizer_folder)

return tokenizer_folder

We'll do something similar for the language model, as well as adding a LoRA configuration before saving. Later, we'll load the configured model from the model_folder returned by this task.

@ct.electron(executor=cpu_executor)

def load_model_for_finetuning(model_id="google/flan-t5-small"):

"""Load model for LoRA finetuning and save it to disk."""

lora_config = LoraConfig(

r=16,

lora_alpha=32,

target_modules=["q", "v"],

lora_dropout=0.05,

bias="none",

task_type=TaskType.SEQ_2_SEQ_LM

)

model = AutoModelForSeq2SeqLM.from_pretrained(model_id)

# Load and configure model

model = prepare_model_for_int8_training(model)

model = get_peft_model(model, lora_config)

model.print_trainable_parameters()

# Save the model

model_folder = finetune_volume / "pretrained"

model_folder.mkdir(exist_ok=True, parents=True)

model.save_pretrained(model_folder)

return model_folder

The fine-tuning task

This is the first compute-intensive task. Here we fine-tune the model by training the LoRA layers on the SAMSum dataset. Using our gpu_executor gives this task access to a T4 GPU (as well as 2 CPUs, 8 GB of RAM, etc.).

@ct.electron(executor=gpu_executor)

def train_model(model_path, tokenizer_path, dataset_path):

"""Run GPU-accelerated finetuning on the dataset."""

# Load dataset

train_dataset = load_from_disk(dataset_path)['train']

# We want to ignore tokenizer pad token in the loss

label_pad_token_id = -100

# Output directory

output_dir = finetune_volume / "finetuned"

# Load tokenizer and model from disk

tokenizer = AutoTokenizer.from_pretrained(tokenizer_path)

config = PeftConfig.from_pretrained(model_path)

model = AutoModelForSeq2SeqLM.from_pretrained(

config.base_model_name_or_path, load_in_8bit=True

)

model = PeftModel.from_pretrained(model, model_path, is_trainable=True)

# Data collator

data_collator = DataCollatorForSeq2Seq(

tokenizer,

model=model,

label_pad_token_id=label_pad_token_id,

pad_to_multiple_of=8

)

# Define training args

training_args = Seq2SeqTrainingArguments(

output_dir=output_dir,

auto_find_batch_size=True,

learning_rate=1e-3, # higher learning rate

num_train_epochs=5,

logging_dir=f"{output_dir}/logs",

logging_strategy="steps",

logging_steps=500,

save_strategy="no",

report_to="tensorboard",

)

# Create Trainer instance

trainer = Seq2SeqTrainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

)

model.config.use_cache = False

trainer.train()

# Save model

model_folder = finetune_volume / "finetuned"

trainer.model.save_pretrained(model_folder)

tokenizer.save_pretrained(model_folder)

return model_folder

Evaluation

Let's also define a task that uses a ROUGE metric to evaluate the model. We'll run this before and after fine-tuning.

@ct.electron(executor=gpu_executor)

def evaluate_peft_model(dataset_path, model_folder, max_target_length=50):

"""Evaluate the model using ROUGE metric."""

test_dataset = load_from_disk(dataset_path)['test']

# Load configurations, model, and tokenizer

config = PeftConfig.from_pretrained(model_folder)

model = AutoModelForSeq2SeqLM.from_pretrained(

config.base_model_name_or_path, load_in_8bit=True

)

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

model = PeftModel.from_pretrained(model, model_folder)

model.eval()

def evaluate_example(example):

input_ids = torch.tensor(

example["input_ids"], dtype=torch.int32).unsqueeze(0)

outputs = model.generate(

input_ids=input_ids, do_sample=True,

top_p=0.9, max_new_tokens=max_target_length

)

prediction = tokenizer.decode(outputs[0], skip_special_tokens=True)

labels = np.where(

np.array(example['labels']) != -100,

np.array(example['labels']),

tokenizer.pad_token_id

)

reference = tokenizer.decode(labels, skip_special_tokens=True)

return prediction, reference

# Generate predictions and references

predictions, references = zip(

*(evaluate_example(example) for example in test_dataset)

)

# Evaluate using ROUGE metric

rouge_metric = evaluate.load("rouge")

rouge_score = rouge_metric.compute(

predictions=predictions, references=references, use_stemmer=True

)

return rouge_score, list(zip(predictions, references))

Covalent Workflow

Now, to create the workflow, we'll put everything together inside a "main" function that connects all the tasks above.

@ct.lattice(workflow_executor=cpu_executor, executor=cpu_executor)

def workflow():

"""Overall finetuning and evaluation workflow"""

dataset_path = load_data()

tokenizer_path = load_tokenizer()

tokenized_dataset_path = preprocess_dataset(dataset_path, tokenizer_path)

pretrained_model_path = load_model_for_finetuning()

# Run evaluation before finetuning

rouge_before, predictions_and_references_before = evaluate_peft_model(

tokenized_dataset_path, pretrained_model_path,

)

# Run finetuning

finetuned_model_folder = train_model(

pretrained_model_path, tokenizer_path, tokenized_dataset_path

)

# Run evaluation after finetuning

rouge_after, predictions_and_references_after = evaluate_peft_model(

tokenized_dataset_path, finetuned_model_folder

)

return {

"rouge_before": rouge_before,

"rouge_after": rouge_after,

"predictions_and_references_before": predictions_and_references_before,

"predictions_and_references_after": predictions_and_references_after

}

We can now execute the workflow by running the following cell.

dispatch_id = cc.dispatch(workflow, volume=finetune_volume)()

Here's how we query the result. Using wait=True here makes the function block until the workflow is done.

result = cc.get_result(dispatch_id, wait=True)

result.result.load()

result_dict = result.result.value

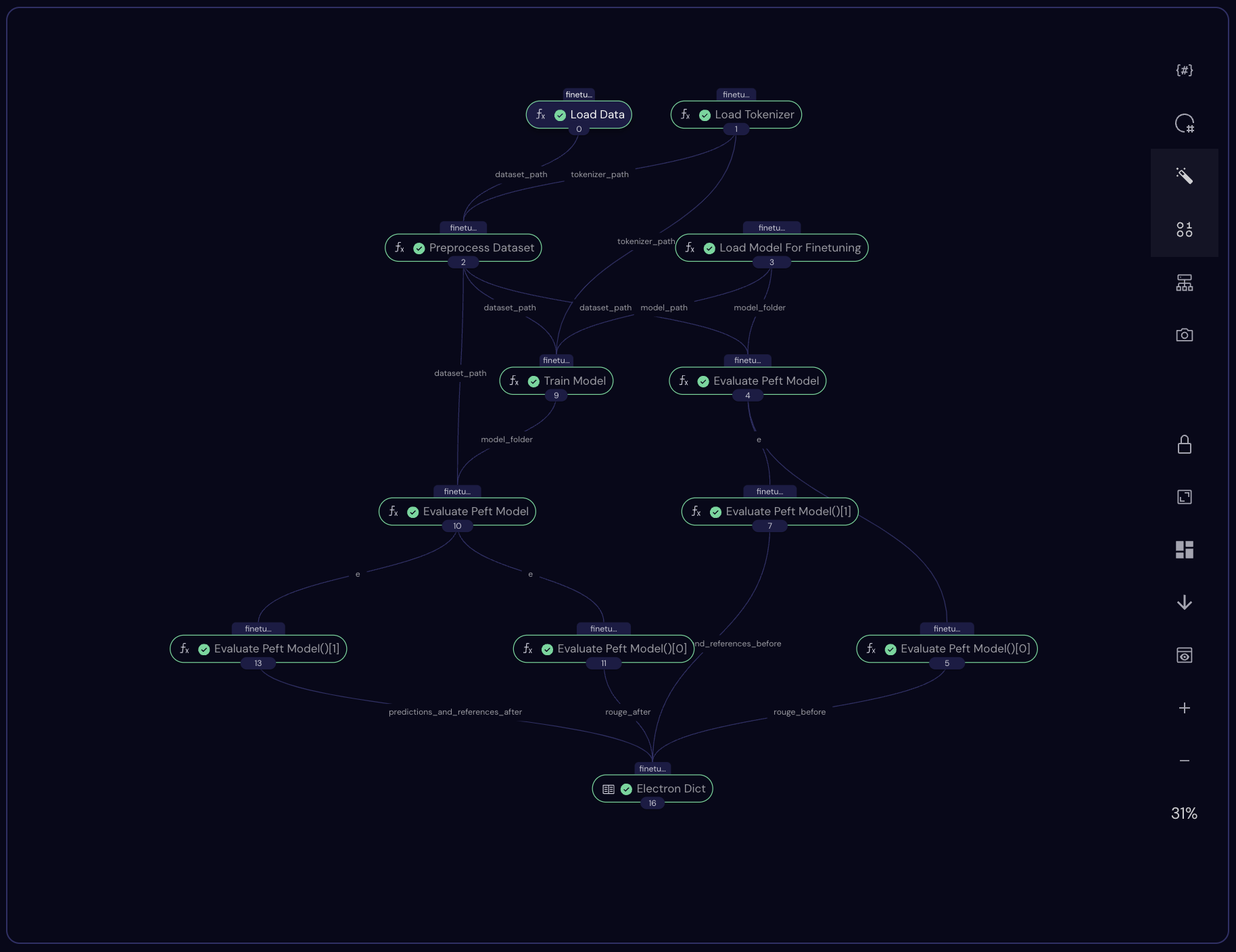

The completed workflow graph in the Covalent Cloud UI should look something like this:

Analyzing results

Let's print the ROUGE scores before and after fine-tuning.

rouge_average_before = np.round(np.average(list(result_dict['rouge_before'].values())), 2)

rouge_average_after = np.round(np.average(list(result_dict['rouge_after'].values())), 2)

difference = np.round((rouge_average_after - rouge_average_before), 2) * 100

print(

f'Rouge score with pretrained model was {rouge_average_before} '

f'and after fine-tuning' f'is {rouge_average_after}, difference is {difference:.1f}%'

)

Rouge score with pretrained model was 0.28 and after fine-tuningis 0.31, difference is 3.0%

Our result also contains some generated summaries from the model evaluations. We can compare these briefly to see if the model has improved.

before_examples, gold_examples = zip(*result_dict['predictions_and_references_before'])

after_examples, gold_examples = zip(*result_dict['predictions_and_references_after'])

data = []

for before, after, gold in zip(before_examples, after_examples, gold_examples):

if before != after and len(gold) < 50:

data.append((before, after, gold))

pd.set_option('display.max_colwidth', 90)

pd.DataFrame(data, columns=['before finetuning', 'after finetuning', 'gold']).head(5)

| before finetuning | after finetuning | gold | |

|---|---|---|---|

| 0 | Ethan is doing some sums up Scott. | Toby, Scott, Toby and Marshall agree that Scott is trying to get him to act like it. | Ethan, Toby and Marshall are making fun of Scott. |

| 1 | Mark told Anne he's 30 today and he saw his passport. He's 40. | Anne is shocked to see Mark's passport's clearance. He was telling Irene she saw Mark'... | Mark lied to Anne about his age. Mark is 40. |

| 2 | Selah is not sure the phone number of that person is off. | Selah can't see the phone number of the person. | Selah called a person that did not pick up. |

| 3 | Jack sent May drinks and drinks, without question. | Jack and May will have drinks today. | Jack and May will drink cocktails later. |

| 4 | Janice's son has asked her to get him a hamster for his birthday. Martina doesn't want... | Janice's son has been asking Martina to buy a hamster for his birthday. | Martina advises against getting a hamster. |

Conclusion

In this tutorial, we used Hugging Face libraries to fine tune a small LLM on a dataset of conversations and summaries, all within the Covalent Cloud platform. A modest improvement in the ROUGE metric was observed after fine-tuning.

The full code can be found below:

Full Code

import covalent as ct

import covalent_cloud as cc

import evaluate

import numpy as np

import pandas as pd

import torch

from datasets import load_dataset, load_from_disk

from peft import (LoraConfig, PeftConfig, PeftModel, TaskType, get_peft_model,

prepare_model_for_int8_training)

from transformers import (AutoModelForSeq2SeqLM, AutoTokenizer,

DataCollatorForSeq2Seq, Seq2SeqTrainer,

Seq2SeqTrainingArguments)

cc.save_api_key("YOUR-API-KEY")

cc.create_env(

name="finetune-samsum-acc",

pip=[

"accelerate==0.31.0",

"bitsandbytes==0.42.0",

"datasets==2.16.1",

"evaluate==0.4.2",

"peft==0.8.2",

"py7zr==0.21.0",

"rouge-score==0.1.2",

"tensorboardX==2.6.2.2",

"torch==2.2.0",

"transformers==4.37.2",

],

wait=True

)

finetune_volume = cc.volume("finetune")

cpu_executor = cc.CloudExecutor(

env="finetune-samsum-acc",

num_cpus=2,

memory="32GB",

time_limit="01:00:00" # 1 hour

)

gpu_executor = cc.CloudExecutor(

env="finetune-samsum-acc",

num_cpus=2,

num_gpus=1,

gpu_type="t4",

memory="32GB",

time_limit="01:00:00"

)

@ct.electron(executor=cpu_executor)

def load_data():

"""Load SAMSum dataset from the hub and save it to disk."""

parent_folder = finetune_volume / "datasets"

ds_cache_dir = parent_folder / "cache"

save_dir = parent_folder / "samsum"

ds_cache_dir.mkdir(exist_ok=True, parents=True)

save_dir.mkdir(exist_ok=True, parents=True)

dataset = load_dataset("samsum", cache_dir=ds_cache_dir)

# Save dataset to disk

dataset.save_to_disk(save_dir)

return save_dir

@ct.electron(executor=cpu_executor)

def load_tokenizer(model_id="google/flan-t5-small"):

"""Load tokenizer from the model and save it to disk."""

tokenizer = AutoTokenizer.from_pretrained(model_id)

tokenizer_folder = finetune_volume / "tokenizer"

tokenizer_folder.mkdir(exist_ok=True, parents=True)

# Save tokenizer to model folder

tokenizer.save_pretrained(tokenizer_folder)

return tokenizer_folder

@ct.electron(executor=cpu_executor)

def preprocess_dataset(

dataset_path, tokenizer_path, max_source_length=255, max_target_length=90

):

"""Preprocess the dataset for finetuning."""

dataset = load_dataset("samsum", data_dir=dataset_path)

tokenizer = AutoTokenizer.from_pretrained(tokenizer_path)

def preprocess_function(sample):

inputs = [f"summarize: {item}" for item in sample["dialogue"]]

model_inputs = tokenizer(

inputs, max_length=max_source_length, padding="max_length", truncation=True

)

labels = tokenizer(

sample["summary"], max_length=max_target_length, padding="max_length", truncation=True

)

labels["input_ids"] = [

[lab if lab != tokenizer.pad_token_id else -100 for lab in label]

for label in labels["input_ids"]

]

model_inputs["labels"] = labels["input_ids"]

return model_inputs

dataset_transformed = dataset.map(

preprocess_function, batched=True,

remove_columns=["dialogue", "summary", "id"]

)

preprocessed_dataset_path = finetune_volume / "preprocessed"

# Save dataset to disk

dataset_transformed.save_to_disk(preprocessed_dataset_path)

return preprocessed_dataset_path

@ct.electron(executor=cpu_executor)

def load_model_for_finetuning(model_id="google/flan-t5-small"):

"""Load model for LoRA finetuning and save it to disk."""

lora_config = LoraConfig(

r=16,

lora_alpha=32,

target_modules=["q", "v"],

lora_dropout=0.05,

bias="none",

task_type=TaskType.SEQ_2_SEQ_LM

)

model = AutoModelForSeq2SeqLM.from_pretrained(model_id)

# Load and configure model

model = prepare_model_for_int8_training(model)

model = get_peft_model(model, lora_config)

model.print_trainable_parameters()

# Save the model

model_folder = finetune_volume / "pretrained"

model_folder.mkdir(exist_ok=True, parents=True)

model.save_pretrained(model_folder)

return model_folder

@ct.electron(executor=gpu_executor)

def train_model(model_path, tokenizer_path, dataset_path):

"""Run GPU-accelerated finetuning on the dataset."""

# Load dataset

train_dataset = load_from_disk(dataset_path)['train']

# We want to ignore tokenizer pad token in the loss

label_pad_token_id = -100

# Output directory

output_dir = finetune_volume / "finetuned"

# Load tokenizer and model from disk

tokenizer = AutoTokenizer.from_pretrained(tokenizer_path)

config = PeftConfig.from_pretrained(model_path)

model = AutoModelForSeq2SeqLM.from_pretrained(

config.base_model_name_or_path, load_in_8bit=True

)

model = PeftModel.from_pretrained(model, model_path, is_trainable=True)

# Data collator

data_collator = DataCollatorForSeq2Seq(

tokenizer,

model=model,

label_pad_token_id=label_pad_token_id,

pad_to_multiple_of=8

)

# Define training args

training_args = Seq2SeqTrainingArguments(

output_dir=output_dir,

auto_find_batch_size=True,

learning_rate=1e-3, # higher learning rate

num_train_epochs=5,

logging_dir=f"{output_dir}/logs",

logging_strategy="steps",

logging_steps=500,

save_strategy="no",

report_to="tensorboard",

)

# Create Trainer instance

trainer = Seq2SeqTrainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

)

model.config.use_cache = False

trainer.train()

# Save model

model_folder = finetune_volume / "finetuned"

trainer.model.save_pretrained(model_folder)

tokenizer.save_pretrained(model_folder)

return model_folder

@ct.electron(executor=gpu_executor)

def evaluate_peft_model(dataset_path, model_folder, max_target_length=50):

"""Evaluate the model using ROUGE metric."""

test_dataset = load_from_disk(dataset_path)['test']

# Load configurations, model, and tokenizer

config = PeftConfig.from_pretrained(model_folder)

model = AutoModelForSeq2SeqLM.from_pretrained(

config.base_model_name_or_path, load_in_8bit=True

)

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

model = PeftModel.from_pretrained(model, model_folder)

model.eval()

def evaluate_example(example):

input_ids = torch.tensor(

example["input_ids"], dtype=torch.int32).unsqueeze(0)

outputs = model.generate(

input_ids=input_ids, do_sample=True,

top_p=0.9, max_new_tokens=max_target_length

)

prediction = tokenizer.decode(outputs[0], skip_special_tokens=True)

labels = np.where(

np.array(example['labels']) != -100,

np.array(example['labels']),

tokenizer.pad_token_id

)

reference = tokenizer.decode(labels, skip_special_tokens=True)

return prediction, reference

# Generate predictions and references

predictions, references = zip(

*(evaluate_example(example) for example in test_dataset))

# Evaluate using ROUGE metric

rouge_metric = evaluate.load("rouge")

rouge_score = rouge_metric.compute(

predictions=predictions, references=references, use_stemmer=True)

return rouge_score, list(zip(predictions, references))

@ct.lattice(workflow_executor=cpu_executor, executor=cpu_executor)

def workflow():

"""Overall finetuning and evaluation workflow"""

dataset_path = load_data()

tokenizer_path = load_tokenizer()

tokenized_dataset_path = preprocess_dataset(dataset_path, tokenizer_path)

pretrained_model_path = load_model_for_finetuning()

# Run evaluation before finetuning

rouge_before, predictions_and_references_before = evaluate_peft_model(

tokenized_dataset_path, pretrained_model_path,

)

# Run finetuning

finetuned_model_folder = train_model(

pretrained_model_path, tokenizer_path, tokenized_dataset_path

)

# Run evaluation after finetuning

rouge_after, predictions_and_references_after = evaluate_peft_model(

tokenized_dataset_path, finetuned_model_folder

)

return {

"rouge_before": rouge_before,

"rouge_after": rouge_after,

"predictions_and_references_before": predictions_and_references_before,

"predictions_and_references_after": predictions_and_references_after

}

dispatch_id = cc.dispatch(workflow, volume=finetune_volume)()

result = cc.get_result(dispatch_id, wait=True)

result.result.load()

result_dict = result.result.value

rouge_average_before = np.round(np.average(list(result_dict['rouge_before'].values())), 2)

rouge_average_after = np.round(np.average(list(result_dict['rouge_after'].values())), 2)

difference = np.round((rouge_average_after - rouge_average_before), 2) * 100

print(

f'Rouge score with pretrained model was {rouge_average_before} '

f'and after finetuning' f'is {rouge_average_after}, difference is {difference}%'

)

before_examples, gold_examples = zip(*result_dict['predictions_and_references_before'])

after_examples, gold_examples = zip(*result_dict['predictions_and_references_after'])

data = []

for before, after, gold in zip(before_examples, after_examples, gold_examples):

if before != after and len(gold) < 50:

data.append((before, after, gold))

pd.DataFrame(data, columns=['before finetuning', 'after finetuning', 'gold']).head(5)